- NEWS

- SHOP

- MENU

- Best Prices

- Collections

- Bundles

- All models and scenes

- All scenes

- Animals

- Appliances

- Architectural elements

- Characters

- Electronics

- Food

- Furniture

- Greenery and plants

- Lighting

- Props and gadgets

- Sport & hobby

- Textiles

- Transportation

- Materials & textures

- Software

- Free Products

New articlesVIEW ALL ARTICLES Removing LUTs from Textures for better resultsRemove the LUT from a specific texture in order to get perfect looking textures in your render.

Removing LUTs from Textures for better resultsRemove the LUT from a specific texture in order to get perfect looking textures in your render. Chaos Corona 12 ReleasedWhat new features landed in Corona 12?

Chaos Corona 12 ReleasedWhat new features landed in Corona 12? OCIO Color Management in 3ds Max 2024Color management is crucial for full control over your renders.

OCIO Color Management in 3ds Max 2024Color management is crucial for full control over your renders. A look at 3dsMax Video SequencerDo you know that you can edit your videos directly in 3ds Max? Renderram is showing some functionalities of 3ds Max's built in sequencer.

A look at 3dsMax Video SequencerDo you know that you can edit your videos directly in 3ds Max? Renderram is showing some functionalities of 3ds Max's built in sequencer. FStorm Denoiser is here - First ImpressionsFirst look at new denoising tool in FStorm that will clean-up your renders.

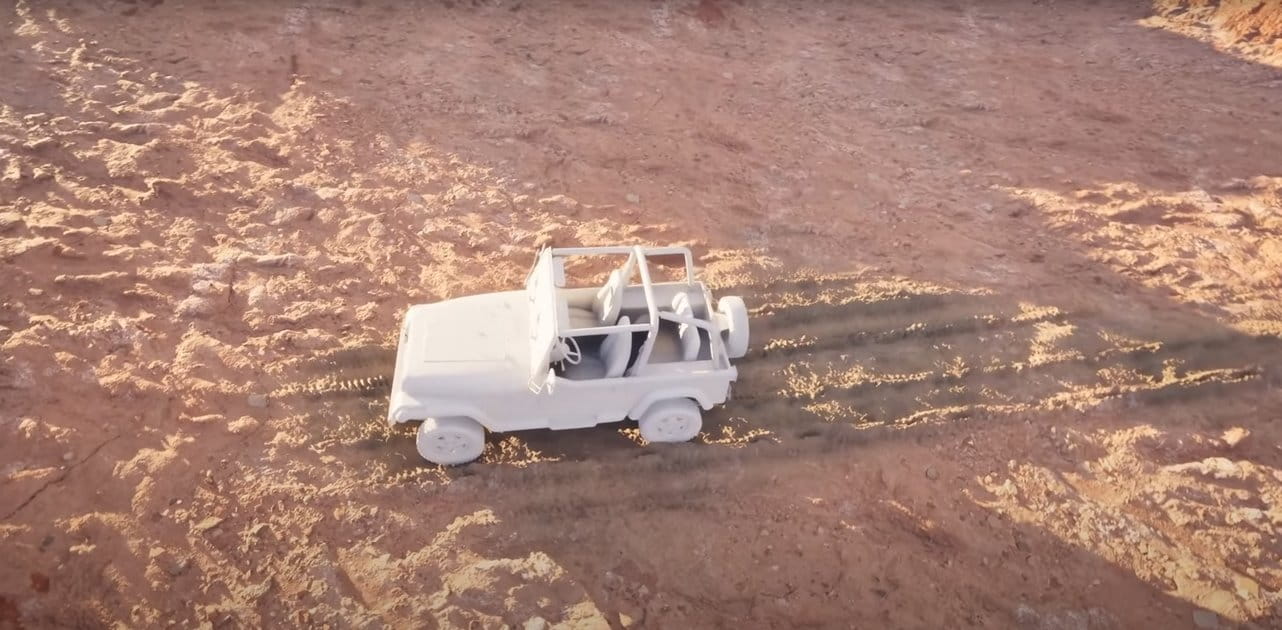

FStorm Denoiser is here - First ImpressionsFirst look at new denoising tool in FStorm that will clean-up your renders. Unreal Engine 5.4: Nanite Tessellation in 10 MinutesThis tutorial takes you through creating a stunning desert scene, complete with realistic tire tracks, using Nanite for landscapes, Gaea for terrain sculpting, and an awesome slope masking auto material.

Unreal Engine 5.4: Nanite Tessellation in 10 MinutesThis tutorial takes you through creating a stunning desert scene, complete with realistic tire tracks, using Nanite for landscapes, Gaea for terrain sculpting, and an awesome slope masking auto material.


